Managers face growing pressure to use AI for crucial decision-making, risk management, and competitive advantage in today's fast-paced business world. Widespread adoption of AI is complicated by issues like fast-changing data, excessive noise in datasets, and the need for scalable solutions.

By concentrating on particular sectors and tasks, domain-specific AI tackles these problems. It provides customized solutions by collecting and evaluating the relevant data, and it can be adjusted to fit specific customer use cases. This specialization makes domain-specific AI an effective instrument for succeeding in challenging business situations.

Domain-Specific AI vs. General AI models

Artificial intelligence, broadly defined, comes in both general-purpose and domain-specific forms, each with its own uses. General AI models cover many tasks at once and handle large amounts of data, but they do not quite manage the more specialized aspects of particular industries and job functions.

Domain-specific AI is built for precise needs, which speeds up deployment and delivers exactly the functionality a customer use case requires. A generalized image recognition algorithm, for example, identifies humans, animals, and objects, while a manufacturing-specific AI application detects defective packaging items on an assembly line. Both are capable, but the latter delivers the specialized solution that the specific industry depends on.

The same holds for content generation: a general-purpose content-generating AI can write essays and poems, whereas a domain-specific model trained on toxicology data can generate chemical safety reports for the energy industry. These examples show how domain-specific AI draws on human domain expertise to give targeted and efficient solutions that make a difference across sectors.

The Benefits of Domain-Specific AI models

The main benefit of domain-specific AI is that it delivers accurate, fast results that scale for businesses. These models are effective because general-purpose AI, pre-trained on broad cross-industry data, cannot handle many specialized tasks.

- Much Higher Accuracy: Domain-specific tools like Spreadsmart can achieve an accuracy of 99% as compared to traditional, manual methods.

- Faster Deployment: Automation platforms can deploy customer analytics models in weeks rather than the months required for custom-built solutions.

- Broader Data Access: Collaborating with multiple clients within an industry enables the development of robust models, even when individual clients lack sufficient data.

- Effective Use of Training Data: These AI systems are designed to function efficiently within their own domains, which helps them work around limits on the available training data.

General-purpose AI usually falls short on domain-specific challenges because of high cost, data constraints, or the difficulty of scaling quickly. Domain-specific AI, by contrast, is an economically sound option for organizations pursuing operational excellence.

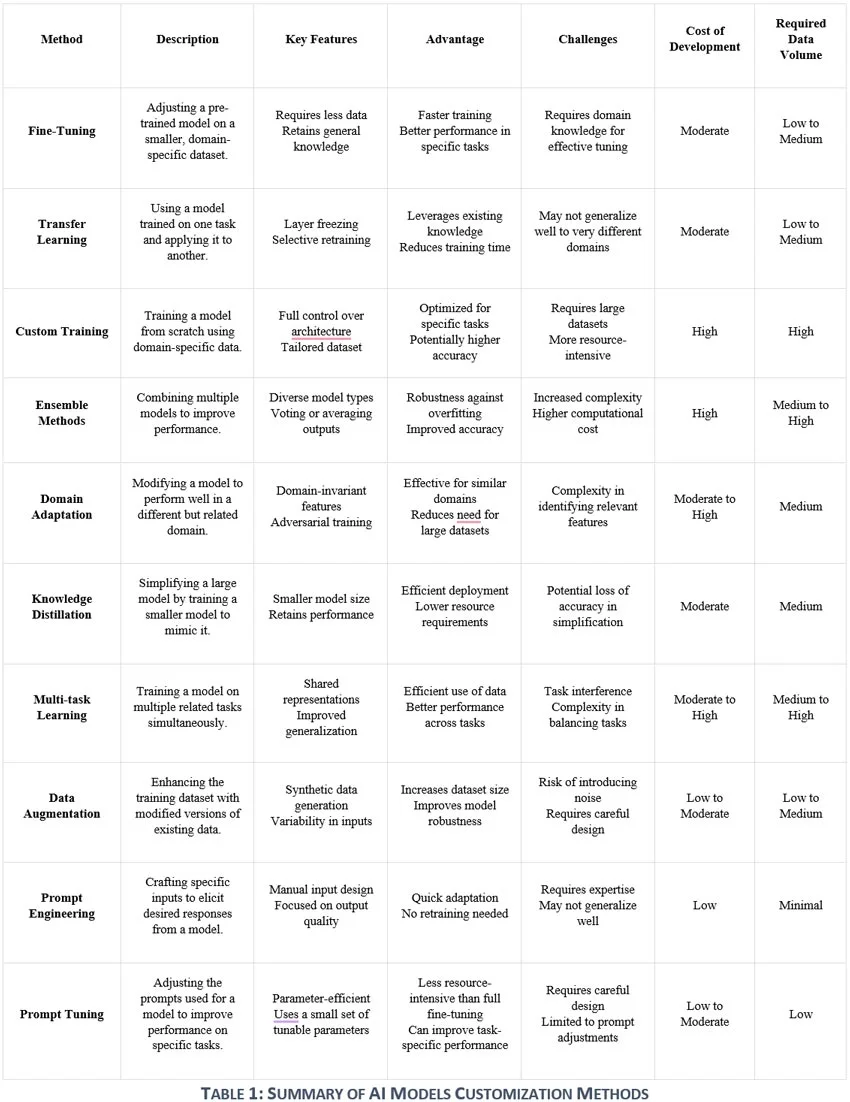

Some Ways to Customize Pre-Trained Models

Businesses and startups can build efficient domain-specific AI solutions without the huge cost of training AI models from scratch. There are several ways to customize pre-trained AI models:

1. Fine-Tuning

Fine-tuning adapts a pre-trained AI model to a specific task by updating some or all of its parameters on domain-specific annotated data. The model keeps building on the knowledge encoded during pre-training while becoming more accurate on the target task. Although this strategy is very successful, it carries the highest risk of overfitting. Fine-tuning can target only the embedding layers, only the language modeling head, or the entire model. Newer variants such as instruction tuning add further flexibility, making fine-tuning an important method for developing custom solutions.

2. Prompt Engineering

Prompt engineering is the art of guiding pre-trained models with well-designed text inputs, or prompts, to elicit responses for a specific task. This approach needs no retraining, which makes it a fast and flexible way to adapt one model to many tasks. However, crafting effective prompts takes considerable expertise and effort, and the results may not match those of fine-tuned models. Regardless, prompt engineering remains an effective way to use frozen models across different solutions.
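As a rough illustration of what a well-designed prompt can look like, the sketch below assembles an instruction, a couple of few-shot examples, and the new input into a single text prompt. The task, the example reports, and the names `FEW_SHOT_EXAMPLES` and `build_prompt` are all hypothetical; the resulting string would be sent to whichever frozen model is in use.

```python
from string import Template

# Hypothetical few-shot examples for a made-up risk classification task.
FEW_SHOT_EXAMPLES = [
    ("Benzene vapour detected above the exposure limit in the tank area.", "high"),
    ("Routine inspection found all storage seals intact.", "low"),
]

# Instruction first, then worked examples, then the new input to complete.
PROMPT_TEMPLATE = Template(
    "You are a safety analyst for the energy industry.\n"
    "Classify the risk level of each report as 'low', 'medium', or 'high'.\n\n"
    "${examples}"
    "Report: ${report}\n"
    "Risk:"
)


def build_prompt(report: str) -> str:
    """Combine the instruction, few-shot examples, and the new report."""
    examples = "".join(
        f"Report: {text}\nRisk: {label}\n\n" for text, label in FEW_SHOT_EXAMPLES
    )
    return PROMPT_TEMPLATE.substitute(examples=examples, report=report.strip())


if __name__ == "__main__":
    print(build_prompt("Minor corrosion observed on a secondary pipeline valve."))
```

Keeping the template separate from the examples makes it easy to swap in different examples or instructions per task without touching the model.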

3. Prompt-Tuning (Optimization)

Prompt-tuning optimizes task-specific performance by treating the prompt itself as a set of trainable parameters. It combines elements of fine-tuning and prompt engineering but conserves resources by updating only the prompts instead of retraining the entire model. Variants include discrete prompts, which use text as input, and continuous prompts, which operate in the embedding space. Prefix tuning and hybrid tuning are more advanced and flexible extensions of the method.
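To make the idea of continuous prompts concrete, here is a deliberately tiny sketch in plain Python. The "pre-trained model" is a toy stand-in (random token embeddings, mean pooling, and a linear head), and all names and data are assumptions for illustration. The point it shows is that gradient descent touches only the soft prompt vectors while the model's own weights stay frozen.

```python
import math
import random

DIM = 4
rng = random.Random(0)

# Frozen "pre-trained" parts (toy stand-ins): token embeddings and a linear head.
EMBEDDINGS = {
    token: [rng.uniform(-1, 1) for _ in range(DIM)]
    for token in ("leak", "spill", "normal", "stable")
}
HEAD_WEIGHTS = [rng.uniform(-1, 1) for _ in range(DIM)]


def predict(soft_prompt, tokens):
    """Prepend the soft prompt, mean-pool all vectors, apply the frozen head."""
    vectors = soft_prompt + [EMBEDDINGS[t] for t in tokens]
    pooled = [sum(v[d] for v in vectors) / len(vectors) for d in range(DIM)]
    logit = sum(w * p for w, p in zip(HEAD_WEIGHTS, pooled))
    return 1.0 / (1.0 + math.exp(-logit))


def mean_loss(soft_prompt, data):
    """Average binary cross-entropy over (tokens, label) pairs."""
    total = 0.0
    for tokens, label in data:
        p = predict(soft_prompt, tokens)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(data)


def tune_prompt(data, prompt_len=2, lr=0.5, steps=200):
    """Gradient descent on the soft prompt only; the model stays frozen."""
    soft_prompt = [[0.0] * DIM for _ in range(prompt_len)]
    for _ in range(steps):
        grad = [0.0] * DIM  # same gradient for every prompt vector under mean pooling
        for tokens, label in data:
            n_vectors = prompt_len + len(tokens)
            error = predict(soft_prompt, tokens) - label
            for d in range(DIM):
                grad[d] += error * HEAD_WEIGHTS[d] / n_vectors / len(data)
        for vector in soft_prompt:
            for d in range(DIM):
                vector[d] -= lr * grad[d]
    return soft_prompt


# Hypothetical labelled examples: 1 = incident, 0 = no incident.
DATA = [(["leak"], 1), (["spill"], 1), (["leak", "spill"], 1), (["normal", "stable"], 0)]

if __name__ == "__main__":
    untrained = [[0.0] * DIM for _ in range(2)]
    print("loss before:", mean_loss(untrained, DATA))
    print("loss after: ", mean_loss(tune_prompt(DATA), DATA))
```

In this toy setup the prompt can only shift the pooled representation, so the gains are modest; in a real transformer the learned vectors interact with every layer through attention.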

4. Reinforcement Learning from Human Feedback (RLHF)

Reinforcement Learning from Human Feedback (RLHF) fine-tunes AI models with reinforcement learning so that their behavior aligns with human preferences. The process involves pre-training, followed by building a reward model from human feedback, and ends with fine-tuning via reinforcement-learning algorithms. RLHF is associated with advanced models such as GPT-4, where it is used to improve compliance with human values and to reduce biases and inaccuracies.
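The middle step, building a reward model from human feedback, can be sketched in a few lines. The example below assumes a linear reward model over two made-up features and trains it with a pairwise preference loss of the Bradley-Terry form, which is one common choice rather than the only one. It covers only the reward-model step; the final reinforcement-learning stage is not shown.

```python
import math

# Hypothetical preference data: each pair holds the features of the response a
# human preferred and the features of the response they rejected. The two
# features stand in for made-up "helpfulness" and "toxicity" scores.
PREFERENCES = [
    ([0.9, 0.1], [0.4, 0.7]),
    ([0.7, 0.0], [0.8, 0.9]),
    ([0.6, 0.2], [0.2, 0.3]),
]


def reward(weights, features):
    """Linear reward model: a weighted sum of response features."""
    return sum(w * x for w, x in zip(weights, features))


def train_reward_model(pairs, lr=0.5, steps=300):
    """Minimise -log(sigmoid(r(chosen) - r(rejected))) by gradient descent."""
    dim = len(pairs[0][0])
    weights = [0.0] * dim
    for _ in range(steps):
        grad = [0.0] * dim
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Derivative of -log(sigmoid(margin)) with respect to margin.
            coeff = -1.0 / (1.0 + math.exp(margin))
            for d in range(dim):
                grad[d] += coeff * (chosen[d] - rejected[d]) / len(pairs)
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights


if __name__ == "__main__":
    learned = train_reward_model(PREFERENCES)
    for chosen, rejected in PREFERENCES:
        print(reward(learned, chosen), ">", reward(learned, rejected))
```

A trained reward model like this would then score candidate outputs during the reinforcement-learning stage, standing in for direct human judgment.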

5. Transfer Learning

Transfer learning reuses a model trained on one task for a different but related one by freezing certain layers and selectively retraining the rest. It exploits past learning and saves training time while maintaining very good performance in similar domains. However, it may generalize poorly to entirely different tasks. Because it is cost-efficient and needs relatively little data, transfer learning is a practical way to reuse AI models across related applications.
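Freezing and selective retraining can be illustrated with a toy two-layer network. In this sketch the first layer plays the role of a pre-trained feature extractor and is never updated, while a fresh output head is trained on the new task. The weights, data, and function names are assumptions chosen only to keep the example small.

```python
import math

# Hypothetical "pre-trained" network: hidden = tanh(layer1 @ x), output = head . hidden
PRETRAINED = {
    "layer1": [[0.5, -0.3], [0.1, 0.8]],  # frozen feature extractor
    "head": [0.0, 0.0],                   # re-initialised for the new task
}

# Made-up data for the new, related task: (input vector, target value).
DATA = [([1.0, 0.0], 1.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 0.0)]


def features(layer1, x):
    """Run the frozen layer to get hidden features."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in layer1]


def predict(model, x):
    hidden = features(model["layer1"], x)
    return sum(w * h for w, h in zip(model["head"], hidden))


def mse(model, data):
    return sum((predict(model, x) - y) ** 2 for x, y in data) / len(data)


def retrain_head(model, data, lr=0.1, epochs=200):
    """Train only the head; layer1 is read but never written."""
    head = list(model["head"])
    for _ in range(epochs):
        grad = [0.0] * len(head)
        for x, y in data:
            hidden = features(model["layer1"], x)
            error = sum(w * h for w, h in zip(head, hidden)) - y
            for j, h in enumerate(hidden):
                grad[j] += 2 * error * h / len(data)
        head = [w - lr * g for w, g in zip(head, grad)]
    return {"layer1": model["layer1"], "head": head}


if __name__ == "__main__":
    adapted = retrain_head(PRETRAINED, DATA)
    print("error before:", mse(PRETRAINED, DATA))
    print("error after: ", mse(adapted, DATA))
```

Because only the small head is trained, the update is cheap and needs few examples, which mirrors the cost and data profile described above.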

6. Knowledge Distillation

Knowledge distillation compresses a complex model into a smaller one that is trained to mimic the original's outputs. This allows complex models to be deployed with much lower computational requirements while retaining a significant portion of the original model's performance. However, the simplification comes with some loss of accuracy. With moderate costs and medium data requirements, knowledge distillation is an effective way to develop lightweight but sufficient AI models.
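One widely used way to train the smaller model is to combine two objectives: match the larger model's softened output distribution, and still predict the true label. The sketch below computes such a loss for a single example. The temperature, the mixing weight `alpha`, and the logits are illustrative assumptions, not values from any particular system.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target KL term and a hard-label cross-entropy term."""
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    kl = sum(t * math.log(t / s) for t, s in zip(soft_teacher, soft_student) if t > 0)
    # Ordinary cross-entropy against the ground-truth label (temperature 1).
    hard = -math.log(softmax(student_logits)[true_label])
    # The temperature**2 factor keeps the soft term's gradient scale comparable.
    return alpha * temperature ** 2 * kl + (1 - alpha) * hard


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.0]
    print(distillation_loss([4.0, 1.0, 0.0], teacher, true_label=0))  # student agrees
    print(distillation_loss([0.0, 1.0, 4.0], teacher, true_label=0))  # student disagrees
```

A student whose outputs track the teacher's gets a low loss, while one that disagrees is penalized by both terms.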

7. Domain Adaptation

Domain adaptation fine-tunes pre-trained models for tasks in a closely related but different domain, most commonly using adversarial training to learn domain-invariant features. It is very useful for similar tasks and needs far less additional data. However, finding and optimizing domain-specific features is often cumbersome. At moderate to high cost and with medium data requirements, domain adaptation is highly relevant for transferring AI capabilities across adjacent disciplines.

8. Ensemble Methods

Ensemble methods improve performance by combining multiple models, often through techniques such as voting or averaging outputs. This improves accuracy and robustness, which makes the approach well suited to complex tasks. The trade-off is high computational overhead and added system complexity. Ensemble techniques are therefore powerful but resource-intensive, with medium to high data requirements and high costs.
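Voting and averaging are simple enough to show directly. The following sketch assumes each model has already produced either a class label or a list of class probabilities; the function names and sample values are hypothetical.

```python
from collections import Counter
from statistics import mean


def majority_vote(predictions):
    """Return the most common label; ties go to the label seen first."""
    return Counter(predictions).most_common(1)[0][0]


def average_probabilities(probability_lists):
    """Average per-class probabilities across models (soft voting)."""
    return [mean(column) for column in zip(*probability_lists)]


if __name__ == "__main__":
    # Three hypothetical models classify the same package image.
    print(majority_vote(["defect", "ok", "defect"]))
    # Two hypothetical models output probabilities for [ok, defect].
    print(average_probabilities([[0.2, 0.8], [0.4, 0.6]]))
```

Every prediction requires running all member models, which is where the computational overhead comes from.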

9. Multi-task Learning

Multi-task learning trains a single model to perform multiple related tasks simultaneously by sharing representations. Joint learning makes data use more efficient and improves performance across the tasks. However, handling task interference and priorities poses a challenge. With medium to high costs and medium to high data requirements, multi-task learning is an effective strategy for exploiting synergies between related tasks.
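A minimal sketch of shared representations, under heavy simplifying assumptions: one shared scalar weight produces a hidden value, and two task-specific heads read from it. The data, initial values, and the `weight_a`/`weight_b` loss weights are made up for illustration. Note how the shared parameter's gradient sums contributions from both tasks, which is also where task interference can arise.

```python
# Toy data (assumed): one input feature and two related regression targets.
DATA = [(0.5, 1.0, -0.5), (1.0, 2.0, -1.0)]  # (x, target_a, target_b)


def joint_loss(params, data, weight_a=1.0, weight_b=1.0):
    """Weighted sum of both tasks' squared errors, averaged over examples."""
    shared, head_a, head_b = params
    total = 0.0
    for x, y_a, y_b in data:
        hidden = shared * x  # shared representation used by both heads
        total += weight_a * (head_a * hidden - y_a) ** 2
        total += weight_b * (head_b * hidden - y_b) ** 2
    return total / len(data)


def train(data, lr=0.05, steps=500):
    """Joint gradient descent with equal task weights."""
    shared, head_a, head_b = 1.0, 0.5, 0.5
    for _ in range(steps):
        g_shared = g_a = g_b = 0.0
        for x, y_a, y_b in data:
            hidden = shared * x
            err_a = head_a * hidden - y_a
            err_b = head_b * hidden - y_b
            g_a += 2 * err_a * hidden / len(data)
            g_b += 2 * err_b * hidden / len(data)
            # The shared weight receives gradient from both tasks.
            g_shared += 2 * (err_a * head_a + err_b * head_b) * x / len(data)
        shared -= lr * g_shared
        head_a -= lr * g_a
        head_b -= lr * g_b
    return shared, head_a, head_b


if __name__ == "__main__":
    print("loss before:", joint_loss((1.0, 0.5, 0.5), DATA))
    print("loss after: ", joint_loss(train(DATA), DATA))
```

Adjusting the per-task loss weights is one simple lever for setting task priorities when the tasks pull the shared parameters in different directions.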

10. Data Augmentation

Data augmentation enlarges an existing training dataset by generating transformed versions of existing examples or entirely artificial examples. Increasing the size and variety of the dataset leads to more robust model performance. The augmentation must be designed carefully so that it does not introduce noise or bias. With low to medium costs and low to medium data requirements, data augmentation is a very effective way of strengthening AI models.
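For text data, two simple transformations are randomly dropping words and swapping neighbouring words. The sketch below applies both to labelled sentences while leaving the labels untouched. The drop probability, the number of copies, and the sample sentences are assumptions, and real projects would need to check that such edits do not change the meaning a label depends on.

```python
import random


def augment_sentence(sentence, rng, drop_prob=0.1):
    """Randomly drop words, then swap one pair of neighbouring words."""
    words = sentence.split()
    # Fall back to the full sentence if every word happened to be dropped.
    kept = [w for w in words if rng.random() > drop_prob] or words
    if len(kept) > 1:
        i = rng.randrange(len(kept) - 1)
        kept[i], kept[i + 1] = kept[i + 1], kept[i]
    return " ".join(kept)


def augment_dataset(examples, copies=2, seed=0):
    """Return the originals plus `copies` perturbed variants of each example."""
    rng = random.Random(seed)  # seeded so the augmentation is reproducible
    augmented = list(examples)
    for text, label in examples:
        for _ in range(copies):
            augmented.append((augment_sentence(text, rng), label))
    return augmented


if __name__ == "__main__":
    data = [
        ("the valve shows minor corrosion near the weld", "medium"),
        ("no defects found", "low"),
    ]
    for text, label in augment_dataset(data):
        print(label, "|", text)
```

Keeping the perturbations small and the seed fixed makes it easier to inspect the generated examples for unwanted noise before training on them.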

Conclusion

Domain-specific Large Language Models (LLMs) are coming into their own. Their combination of deep specialization and powerful computational capabilities can bring clear benefits to entire industries, from environmental conservation to worldwide commerce.

Pairing domain experts with AI innovators will be critical to developing models that excel in both technical accuracy and real-world relevance. We are about to enter a new stage of AI in which breadth and depth are integrated as universal and specialized models come together.

The future of domain-specific AI holds tremendous promise. Together, we can shape this future and unlock the potential of customized AI solutions.

References

- Evalueserve. “The Ultimate Guide to Domain-Specific AI.” Retrieved from https://www.evalueserve.com/the-ultimate-guide-to-domain-specific-ai-ebook/.

- Design Bootcamp. “3 Ways to Tailor Foundation Language Models Like GPT for Your Business.” Retrieved from https://medium.com/design-bootcamp/3-ways-to-tailor-foundation-language-models-like-gpt-for-your-business-e68530a763bd/.

- Towards Data Science. “Specialized LLMs: ChatGPT, LaMDA, Galactica, Codex, Sparrow, and More.” Retrieved from https://towardsdatascience.com/specialized-llms-chatgpt-lamda-galactica-codex-sparrow-and-more-ccccdd9f666f/.

- Google Research. “Guiding Frozen Language Models with Learned Soft Prompts.” Retrieved from https://research.google/blog/guiding-frozen-language-models-with-learned-soft-prompts/.

- IBM Research. “What Is AI Prompt Tuning?” Retrieved from https://research.ibm.com/blog/what-is-ai-prompt-tuning/.