Large language models (LLMs) can carry out many functions, from database building, data labeling, and analysis to code generation and almost any text-based interaction. However, they usually cannot verify the correctness of their own responses, which leads to inaccuracies and inconsistencies. IBM Research is exploring ways to make LLMs self-improving in order to overcome these accuracy issues. The work was presented at the Association for Computational Linguistics (ACL) conference in Bangkok.

Obstacles to Enhancing the Performance of LLMs:

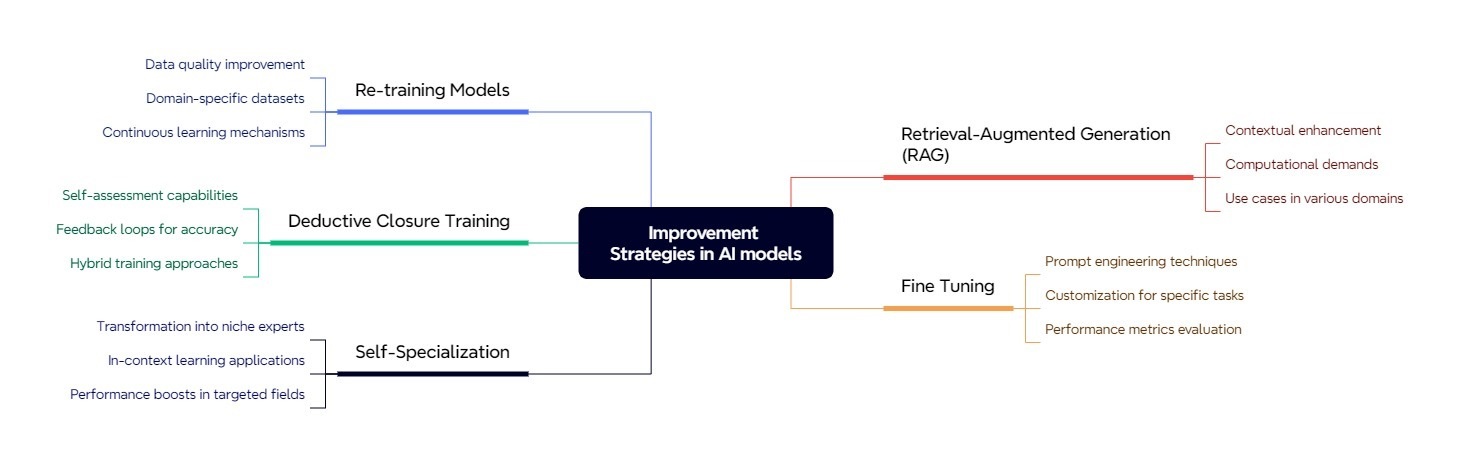

Working with crisp, exact facts, such as specific historical dates or legal statutes, and with domain-specific tasks where precision is critical remains a challenge for LLMs. Existing remedies, such as retrieval-augmented generation (RAG), retraining models on better datasets, or fine-tuning them on domain-specific material, are costly and labor-intensive, which undermines their cost-effectiveness.

Deductive Closure Training:

“Deductive closure training” is a technique developed by Leshem Choshen of IBM and collaborators to address these inconsistencies. In it, the model evaluates and corrects errors in its own output using the knowledge it acquired during training.

As a first step, the model is prompted to generate many statements related to an initial piece of information (the seed), without regard to whether they are true or false. It then estimates how likely each of these statements is to be true and is updated based on those estimates.

For instance, given the seed sentence ‘The sky is green,’ the system might generate related statements such as ‘The sky is the same color as broccoli’ and ‘The sky is blue.’ It then checks the generated statements for contradictions and learns to correct itself, so that it is trained to prefer true statements over false ones.

The method can be applied in either a supervised or an unsupervised manner. In the supervised setting, the model is supplied with seed statements that are known to be true. In experiments, applying the method improved the accuracy of text generation by nearly 26%.
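
The selection step can be illustrated with a small Python sketch. It is a simplification under stated assumptions, not IBM's implementation: the truth probabilities and the `implies`/`contradicts` relations are hypothetical stand-ins for what the model itself would generate and score, and the brute-force search over every true/false assignment is only practical for a handful of statements. The sketch picks the consistent assignment the model finds most probable and returns the statements marked true as fine-tuning targets; passing `fixed_true` mimics the supervised setting, where seed statements are known to be true.

```python
import math
from itertools import product

# Hypothetical model-assigned probabilities that each statement is true.
# In a real system these scores would come from prompting the LLM itself.
statements = {
    "The sky is green.": 0.3,  # the seed
    "The sky is blue.": 0.9,
    "The sky is the same color as broccoli.": 0.2,
}

# Hypothetical relations the model generated between its statements:
# ("contradicts", a, b): a and b cannot both be true.
# ("implies", a, b): if a is true, b must also be true.
relations = [
    ("contradicts", "The sky is green.", "The sky is blue."),
    ("implies", "The sky is green.", "The sky is the same color as broccoli."),
]


def is_consistent(assignment, relations):
    """Check that a truth assignment respects every generated relation."""
    for kind, a, b in relations:
        if kind == "contradicts" and assignment[a] and assignment[b]:
            return False
        if kind == "implies" and assignment[a] and not assignment[b]:
            return False
    return True


def log_score(assignment, probs):
    """Log-probability of an assignment under the model's own estimates."""
    return sum(math.log(p if assignment[s] else 1.0 - p) for s, p in probs.items())


def most_consistent(probs, relations, fixed_true=()):
    """Return the highest-scoring consistent assignment (exhaustive search).

    fixed_true lists statements known to be true (the supervised setting).
    """
    names = list(probs)
    best, best_score = None, -math.inf
    for values in product([False, True], repeat=len(names)):
        assignment = dict(zip(names, values))
        if any(not assignment[s] for s in fixed_true):
            continue
        if not is_consistent(assignment, relations):
            continue
        score = log_score(assignment, probs)
        if score > best_score:
            best, best_score = assignment, score
    return best


def finetune_targets(assignment):
    """Statements judged true become the data the model is fine-tuned on."""
    return [s for s, is_true in assignment.items() if is_true]


print(finetune_targets(most_consistent(statements, relations)))
# ['The sky is blue.']
print(finetune_targets(most_consistent(statements, relations, fixed_true=["The sky is green."])))
# ['The sky is green.', 'The sky is the same color as broccoli.']
```

In the unsupervised run, the model's strong belief that the sky is blue overrides the false seed; once the seed is pinned as true, consistency forces its implication into the training set as well.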

IBM Research is investigating self-specialization and deductive closure training as ways to improve large language model (LLM) performance without substantial extra computational cost. Deductive closure training lets models check the internal consistency of the outputs they produce, while self-specialization turns a generalist model into a specialist using very little data. Both have been shown to improve accuracy and performance over baseline models across a range of topics.

Self-Specialization:

A separate team of researchers from MIT and the MIT-IBM Watson AI Lab developed “self-specialization,” which aims to draw out the expert-level knowledge already latent in a model.

Self-specialization turns an LLM into a subject-matter expert by giving it some seed material: small, focused inputs on a particular topic. For example, if a model whose training data included genetics material is asked to write about gene variants, it can draw on the ‘hidden’ knowledge it acquired during that earlier training.
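
The seed-driven data generation behind self-specialization can be sketched as a Self-Instruct-style loop: prompt the base model with a few domain seeds, ask it for new tasks, and keep only those that are not near-duplicates. This is an illustrative sketch, not the researchers' pipeline. `generate` is a hypothetical wrapper around the base LLM, the prompt wording and seeds are invented for the example, and `difflib.SequenceMatcher` stands in for whatever similarity filter a real pipeline uses. In practice the model would also write responses to the new tasks, and the resulting pairs would be used to fine-tune it.

```python
from difflib import SequenceMatcher

# Hypothetical seed instructions for a genetics specialist.
SEED_INSTRUCTIONS = [
    "Explain what a single-nucleotide polymorphism is.",
    "Describe how a missense variant can change a protein.",
]


def build_prompt(seeds, domain):
    """Few-shot prompt asking the base model for a new in-domain instruction."""
    examples = "\n".join(f"- {s}" for s in seeds)
    return (
        f"You are an expert in {domain}. Example tasks:\n"
        f"{examples}\n"
        "Write one new, different task in the same domain:"
    )


def is_novel(candidate, pool, threshold=0.7):
    """Reject candidates that closely match an instruction already in the pool.

    difflib's similarity ratio is a stand-in for the ROUGE-L filter used by
    Self-Instruct-style pipelines.
    """
    return all(
        SequenceMatcher(None, candidate.lower(), seen.lower()).ratio() < threshold
        for seen in pool
    )


def generate_specialist_data(generate, seeds, domain, rounds):
    """Grow a pool of in-domain instructions from the model's own outputs.

    `generate` is a hypothetical callable wrapping the base LLM (prompt -> text).
    """
    pool = list(seeds)
    for _ in range(rounds):
        candidate = generate(build_prompt(seeds, domain)).strip()
        if candidate and is_novel(candidate, pool):
            pool.append(candidate)
    return pool[len(seeds):]  # only the newly generated instructions


# Usage with a canned stand-in for the LLM.
canned = iter([
    "Explain what a single nucleotide polymorphism is.",  # near-duplicate, rejected
    "Summarize how CRISPR screens reveal gene function.",
])
new_tasks = generate_specialist_data(
    lambda prompt: next(canned), SEED_INSTRUCTIONS, "genetics", rounds=2
)
print(new_tasks)  # ['Summarize how CRISPR screens reveal gene function.']
```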

In experiments with Databricks’ MPT-30B model, self-specialized models performed much better than their base models on datasets from several domains, including biomedicine and finance. Models such as Alpaca and Dromedary, built on Meta’s much larger LLaMA-65B, performed only slightly better than the self-specialized models.

Self-specialized models are efficient because the specialization can be added to the pre-trained model as a lightweight module that is active only when needed, which reduces unnecessary load on compute resources. The self-specialized models also performed better on domains they were not originally specialized for.
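
The source does not say exactly how the specialization is attached to the pre-trained model. One common way to build a lightweight add-on that can be switched on only when needed is a low-rank adapter in the style of LoRA, and the sketch below assumes that design. The frozen base weights are always used; the extra low-rank product is computed only when `enabled` is set. The class and parameter names are hypothetical, and plain nested lists replace a tensor library to keep the example dependency-free.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix; both are nested lists."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


class AdaptedLinear:
    """A frozen linear layer plus an optional low-rank (LoRA-style) adapter."""

    def __init__(self, base_weight, adapter_a, adapter_b, scale=1.0):
        self.base = base_weight  # frozen pretrained weights, d_in x d_out
        self.a = adapter_a       # d_in x r, with rank r much smaller than d_in
        self.b = adapter_b       # r x d_out
        self.scale = scale
        self.enabled = False     # the specialist adapter is off by default

    def __call__(self, x):
        out = matmul(x, self.base)
        if not self.enabled:
            return out  # generalist behavior, no extra compute
        # Specialist behavior: add the low-rank correction x @ A @ B.
        delta = matmul(matmul(x, self.a), self.b)
        return [
            [o + self.scale * d for o, d in zip(row_out, row_delta)]
            for row_out, row_delta in zip(out, delta)
        ]


layer = AdaptedLinear(
    base_weight=[[1.0, 0.0], [0.0, 1.0]],  # 2 x 2 identity
    adapter_a=[[1.0], [0.0]],              # rank-1 adapter: 2 x 1
    adapter_b=[[0.0, 2.0]],                # 1 x 2
)
x = [[3.0, 4.0]]
print(layer(x))  # [[3.0, 4.0]]
layer.enabled = True
print(layer(x))  # [[3.0, 10.0]]
```

Because only the small matrices `A` and `B` are specific to a domain, several specialists can share one copy of the base weights.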

The researchers also proposed a technique for merging several specialized models into a single model. The merged model outperformed both the base model and the individual specialized models across all the specialization topics.[0]
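
The merging technique itself is not described here, so the following sketch swaps in one simple, widely used approach purely as an illustration: averaging each specialist's weight delta (its difference from the shared base model) and adding the result back to the base. It should not be read as the researchers' method; the function names, parameter names, and values are hypothetical.

```python
def merge_deltas(deltas, weights=None):
    """Combine several specialists' weight deltas into a single delta.

    Each delta maps a parameter name to a flat list of floats: the difference
    between a specialist's weights and the shared base model's weights.
    """
    if not deltas:
        raise ValueError("need at least one specialist")
    if weights is None:
        weights = [1.0 / len(deltas)] * len(deltas)  # uniform average
    if len(weights) != len(deltas):
        raise ValueError("need exactly one weight per specialist")
    merged = {}
    for name in deltas[0]:
        # Walk the same parameter position across all specialists.
        columns = zip(*(d[name] for d in deltas))
        merged[name] = [sum(w * v for w, v in zip(weights, col)) for col in columns]
    return merged


def apply_delta(base, delta):
    """Add a merged delta back onto the base model's weights."""
    return {
        name: [b + d for b, d in zip(values, delta[name])]
        for name, values in base.items()
    }


base = {"layer.w": [1.0, 1.0]}
biomed = {"layer.w": [0.5, 0.0]}   # hypothetical biomedicine specialist
finance = {"layer.w": [0.0, 0.5]}  # hypothetical finance specialist
merged = apply_delta(base, merge_deltas([biomed, finance]))
print(merged)  # {'layer.w': [1.25, 1.25]}
```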

Recursive Self-Improvement (RSI):

Recursive self-improvement (RSI) is a process in which an early or intermediate-level AGI system improves itself autonomously. Because each improvement could make the next one easier, the process could in principle continue indefinitely, potentially leading to superintelligence or an “intelligence explosion.”

The prospect of RSI raises ethical and security concerns, since such systems could evolve in ways that exceed human understanding and control.[1][2]

Seed Improver Concept:

The “seed improver” is a foundational architecture that equips an AGI with the initial capabilities it needs for recursive self-improvement. In this context, the term “Seed AI” describes a system that serves as a precursor to the more sophisticated self-improving AI models of the future.

The seed improver gives the AGI the core skills to plan, write, compile, test, debug, and execute arbitrary code, and to verify its changes so that it does not lose capabilities it already has.
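
The "compile, test, execute" part of the seed improver can be illustrated with Python's built-in `compile()` and `exec()`: a candidate version of a function is compiled, run, and checked against test cases before it is accepted. The function name `validate_candidate` and the test format are assumptions made for this sketch. Because `exec()` runs arbitrary code, a real self-modifying system would need to run this step in a sandbox.

```python
def validate_candidate(source, func_name, tests):
    """Compile and run candidate code, then check it against test cases.

    tests is a list of (args_tuple, expected_result) pairs.
    Returns (passed, message). exec() runs arbitrary code, so this must be
    sandboxed in any real system.
    """
    try:
        code = compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return False, f"compile error: {err.msg}"
    namespace = {}
    try:
        exec(code, namespace)
        func = namespace[func_name]
        for args, expected in tests:
            result = func(*args)
            if result != expected:
                return False, f"{func_name}{args} returned {result!r}, expected {expected!r}"
    except Exception as err:  # missing function or runtime failure in the candidate
        return False, f"runtime error: {type(err).__name__}: {err}"
    return True, "all tests passed"


tests = [((2, 3), 5), ((-1, 1), 0)]
good = "def add(a, b):\n    return a + b\n"
buggy = "def add(a, b):\n    return a - b\n"
broken = "def add(a, b)\n    return a + b\n"  # missing colon

print(validate_candidate(good, "add", tests))       # (True, 'all tests passed')
print(validate_candidate(buggy, "add", tests))      # (False, 'add(2, 3) returned -1, expected 5')
print(validate_candidate(broken, "add", tests)[0])  # False
```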

Initial Architecture:

Imagine an intelligent agent that continuously learns and improves itself over time. This agent would be the core of the initial AGI framework. It would set its own objectives, such as becoming far more knowledgeable than it currently is, and work persistently toward them. Creating such an agent requires a “seed improver” with several key elements:

- First, a recursive self-prompting loop, so that the agent can keep setting new tasks for itself and keep improving.

- Second, basic programming capabilities, so that the agent can read, modify, and extend its own code.

- Third, a goal to pursue, such as becoming more capable.

- Finally, validation mechanisms to check that the agent is making progress and moving in the right direction. The agent could even write some of these tests itself as part of the learning process.
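
The four elements can be tied together in a toy loop, loosely in the spirit of the seed improver described in the STOP paper listed under Resources, which asks a language model for candidate improvements and keeps the best one according to a utility function. Everything here is a hypothetical stand-in: the "program" is a single number, `tweak` replaces a model rewriting its own code, `goal` plays the role of the mission, and `in_bounds` is the validation check. Unlike STOP, this sketch does not apply the improver to its own source code, so it shows the loop structure rather than true recursion.

```python
import random


def improve(solution, utility, propose, validate, n_candidates=4):
    """One improvement step: propose variants and keep the best valid one."""
    best, best_score = solution, utility(solution)
    for _ in range(n_candidates):
        candidate = propose(best)
        if not validate(candidate):
            continue  # reject changes that fail the checks
        score = utility(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


def self_improvement_loop(solution, utility, propose, validate, rounds=50):
    """Repeatedly call the improver on its own latest result."""
    history = [utility(solution)]
    for _ in range(rounds):
        solution, score = improve(solution, utility, propose, validate)
        history.append(score)
    return solution, history


# Toy stand-ins (all hypothetical).
rng = random.Random(0)


def goal(x):
    """The mission: get as close to 3 as possible (higher is better)."""
    return -(x - 3.0) ** 2


def tweak(x):
    """Stand-in for the agent proposing a modified version of itself."""
    return x + rng.gauss(0.0, 0.5)


def in_bounds(x):
    """Validation check: only accept candidates within a safe range."""
    return -10.0 <= x <= 10.0


best, history = self_improvement_loop(0.0, goal, tweak, in_bounds)
print(f"start utility {history[0]:.2f}, final utility {history[-1]:.2f}")
```

Because a candidate replaces the current solution only when it passes validation and scores higher, the recorded utility never decreases from one round to the next.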

Impacts:

These lines of research address the limitations of LLMs by improving their precision and domain knowledge without substantially increasing computational cost.

Deductive closure training helps ensure factual consistency, and self-specialization enables narrow but deep use of LLMs in particular domains with very little labeled data. Together, these approaches improve LLM performance on specialized academic research and industry tasks.

Concluding Remarks:

Deductive closure training, self-specialization, and the seed improver concept could substantially improve how models learn from their training corpora. Self-specialization focuses learning on specific domains, while deductive closure training targets the underlying logical consistency of what the model has learned.

Deductive closure training makes LLMs more consistent, which improves their performance. Self-specialization makes it possible to derive specialized versions of LLMs with much less data, deepening their performance in specific applications. Finally, the seed improver concept points toward autonomous artificial general intelligence systems that could learn and optimize themselves at a larger scale, opening new possibilities for AI research and development.

These advances could change how models learn from their training corpora, but they also raise practical questions about how self-improving AI models should be used. Controls are needed to monitor and mitigate the harm that malicious users, or misuse of the technology, could cause to society.

Resources:

- Hess, P. (2024, August 14). Teaching AI models to improve themselves. IBM Research Blog. https://research.ibm.com/blog/teaching-AI-models-to-improve-themselves

- Creighton, J. (2019, March 19). The unavoidable problem of self-improvement in AI: An interview with Ramana Kumar, Part 1. Future of Life Institute. https://futureoflife.org/ai/the-unavoidable-problem-of-self-improvement-in-ai-an-interview-with-ramana-kumar-part-1

- Zelikman, E., Lorch, E., Mackey, L., & Kalai, A. T. (2023, October 3). Self-taught optimizer (STOP): Recursively self-improving code generation. arXiv preprint arXiv:2310.02304. https://arxiv.org/abs/2310.02304